A UX approach to secure and explainable AI access across enterprise environments.

Role

Founding UX Designer

Industry

Data Governance

Timeline

9 Months

Team

CEO & Engineering Team (Director, Founding, AI/ML, Front-End)

At Codified, I took on a fascinating design challenge: helping CISOs at companies like JP Morgan confidently say “yes” to AI innovation.

The reality of enterprise and early-stage design is that you don't learn everything upfront. At Codified, too, our most important insights emerged only after customers started giving us feedback on what we had built.

Through 50+ customer conversations across 9 months, we learned that enterprise AI adoption is a challenge of confidence and trust that can only be solved through iterative learning.

Oversharing & Permission Discipline Issues

Files and folders have been shared too broadly over time, creating security risks when AI systems access this data.

Classification and Categorization Nightmare

Existing tools can't properly categorize and classify sensitive data, making rule-setting impossible.

AI Deployment Blocked by Security Concerns

Companies want to deploy AI tools, but security teams are blocking rollouts over data access concerns.

Manual Permission Management is Unsustainable

Current solutions require manual review and fixing of permissions, which doesn't scale.

Lack of Real-Time Visibility & Auditing

Companies can't see what data their AI systems are accessing in real time, nor can they audit access patterns.

Data security posture management (DSPM) and data loss prevention (DLP) tools only say "there's smoke somewhere"; they don't tell you where the fire is, how bad it is, or what to do about it.

These tools are good at pattern matching: scanning documents to find SSNs, credit card numbers, or other sensitive data. But they stop there, leaving security teams with more questions to answer.

Manual Workarounds Don't Scale

Multiple customers mentioned that they had created personas and assigned data owners (who go fix permissions), then stress-tested their AI systems with adversarial prompts.

Core MVP Features (initial attempt at solving the identified gaps)

The first critical step: without connected data sources, there's no way to evaluate or manage AI data access.

We thought intelligent classification by sensitivity, domain, and data source would provide the missing context.

Teams needed clear visibility into who could access what data.

Teams needed a way to define and test AI data access policies upfront.

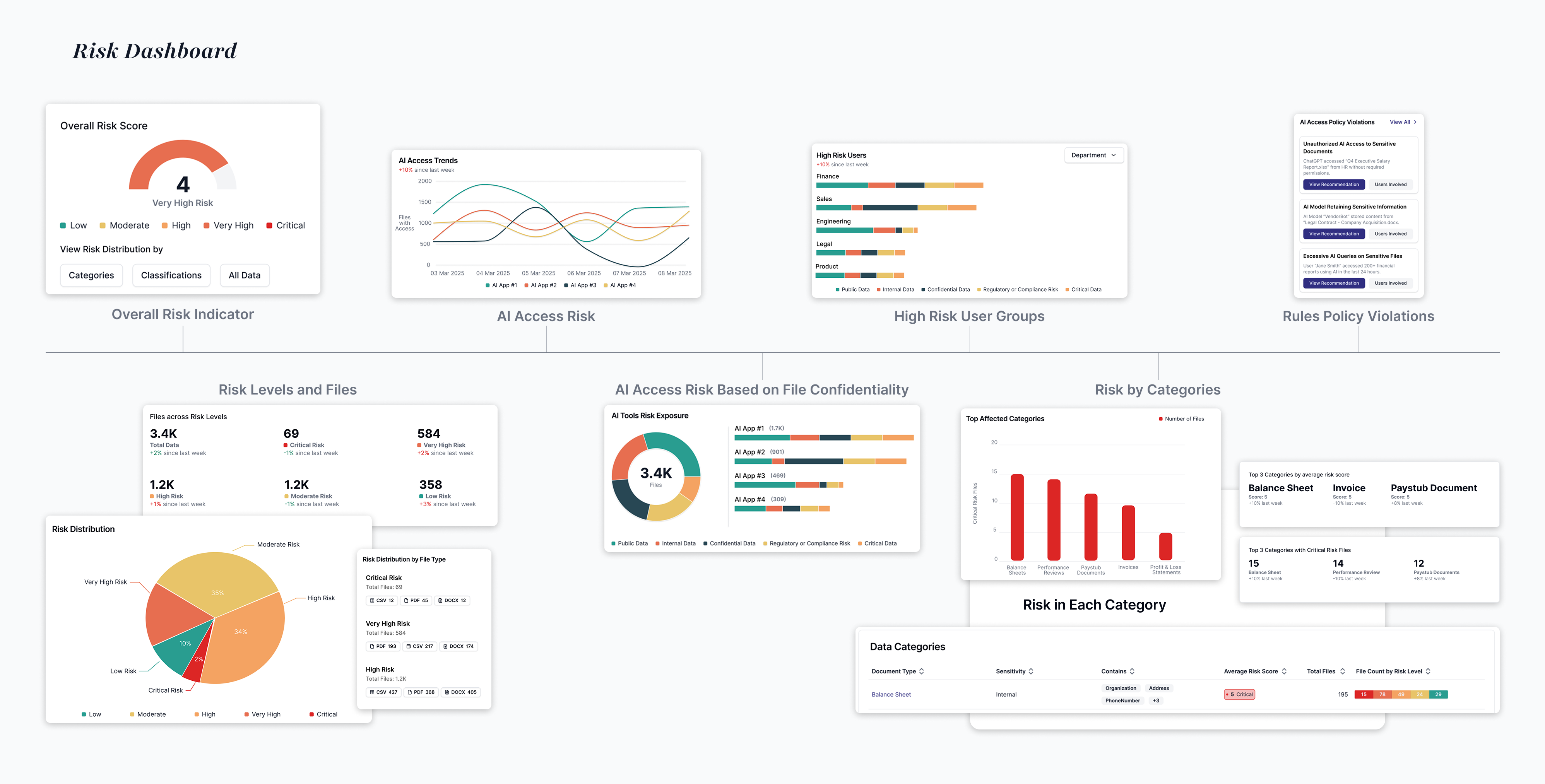

Policy violations and risk breakdowns needed an intuitive visual representation.

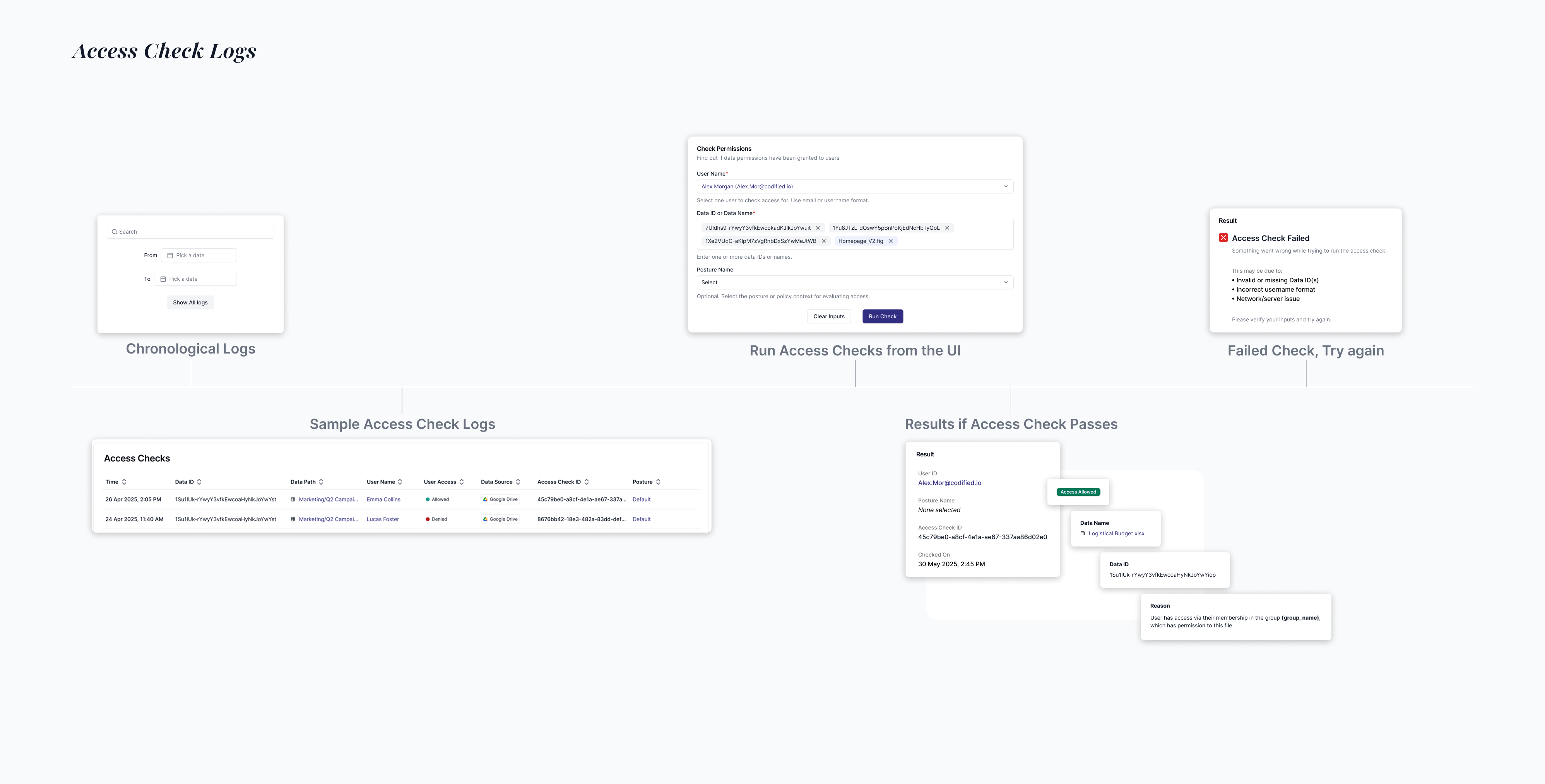

We thought basic logging of API permission checks would provide the audit trail teams needed.

"This is helpful, but you're missing something fundamental. Every organization thinks about data differently. Our 'Sensitive' isn't the same as JP Morgan's 'Sensitive.' We need to label data by our business structure, not generic categories."

Customer Feedback Pattern

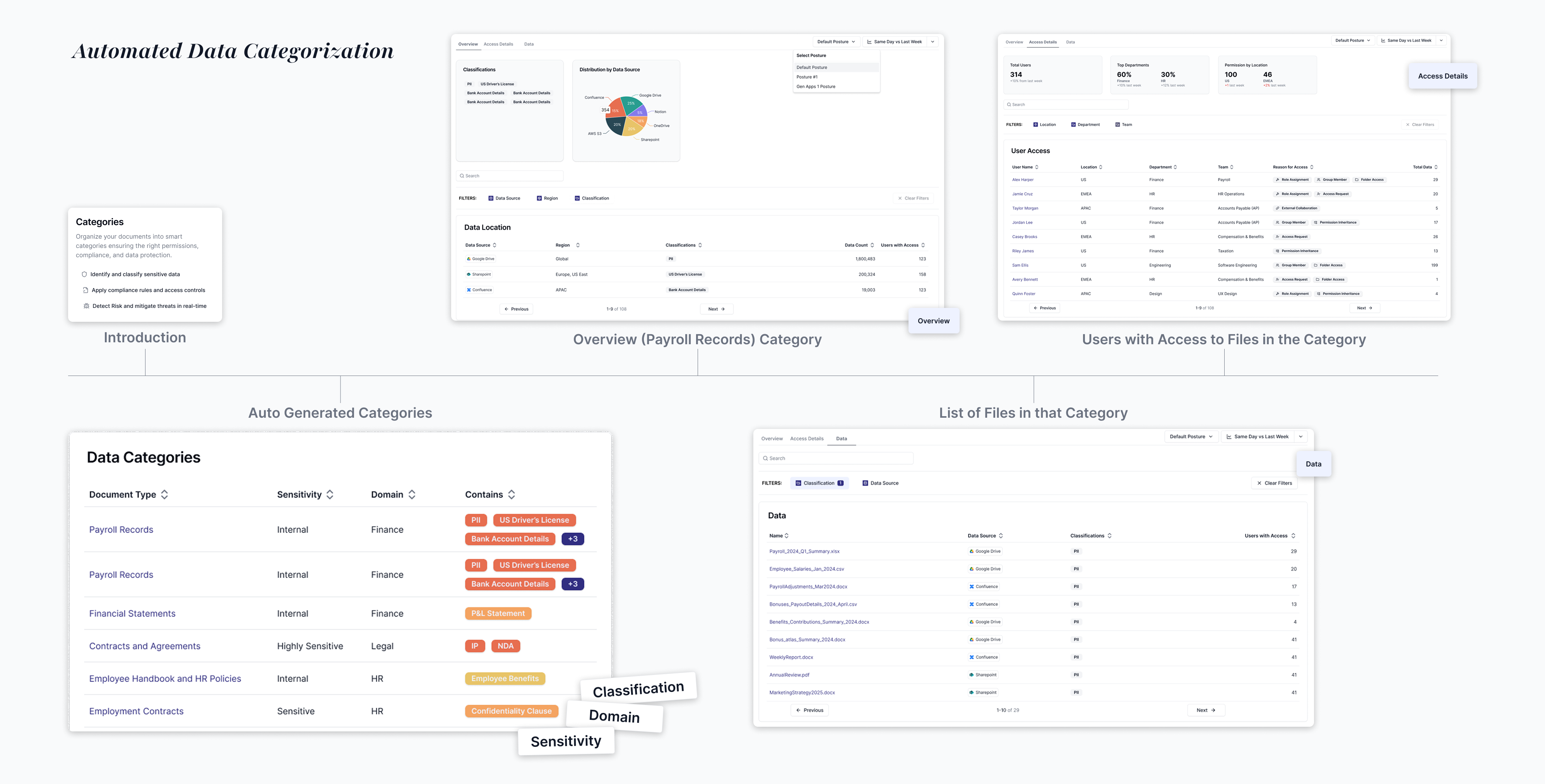

As customers began trying our categorization and classification system, consistent feedback emerged:

"We need to label data by our business structure, not generic categories"

"Our legal team has different sensitivity requirements than our product team"

"Can we tag things as 'board-level confidential' or 'customer-facing approved'?"

Context matters more than accuracy. Generic categorization and classification, no matter how sophisticated, don't match enterprise mental models.

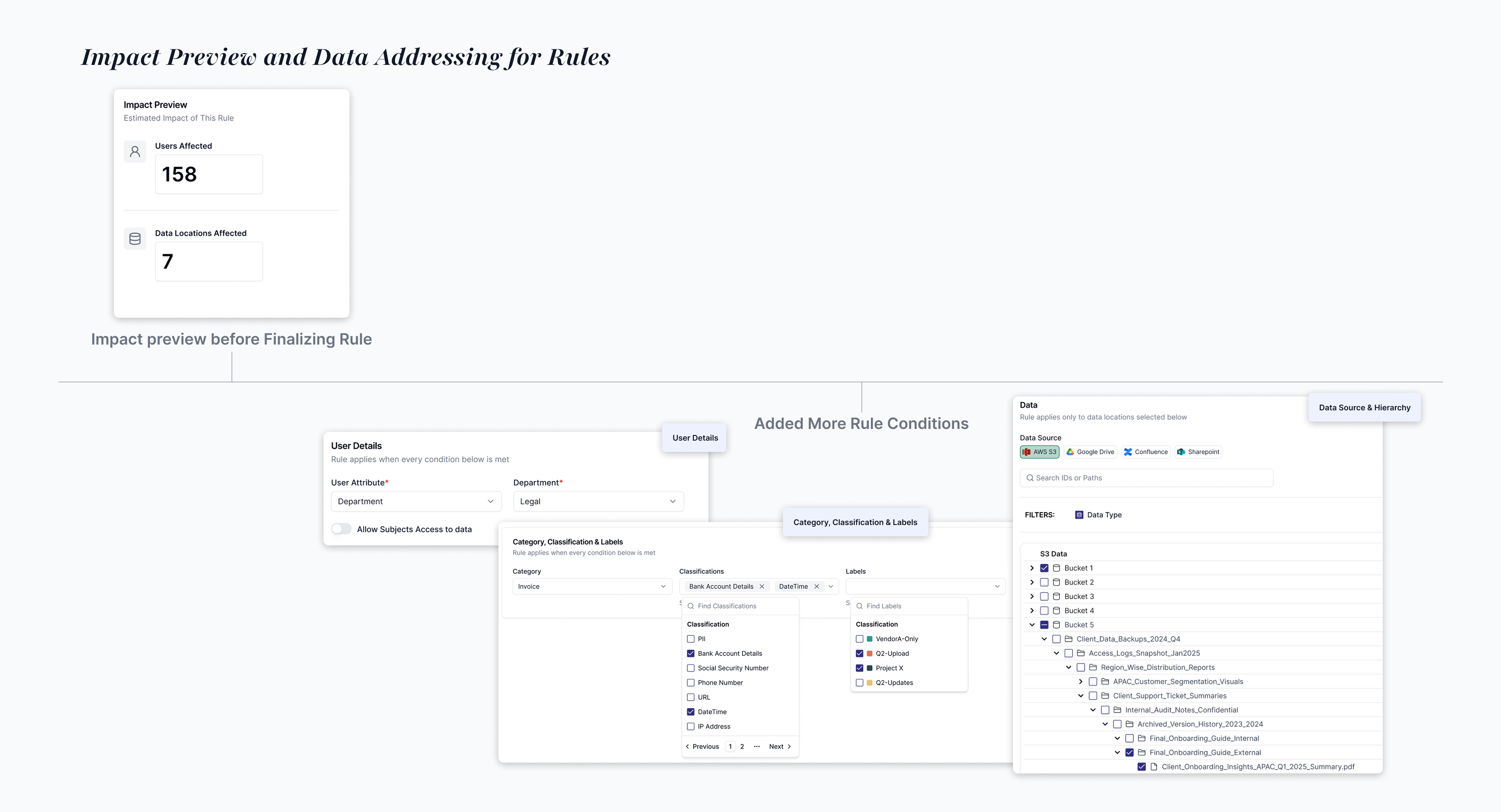

"We're worried about actually SETTING UP RULES. What if we accidentally block the entire finance team from their own data? We need to know BEFORE we implement what will break."

The Fear Pattern

Customer after customer expressed the same anxiety about our rules engine:

"We need to see exactly which files and users will be affected, before we hit apply."

"What if this rule ends up blocking access to the wrong folders?"

"Our data lives in layers. How do we know what part of the hierarchy this rule actually touches?"

Visibility means nothing if users are afraid to act on it.

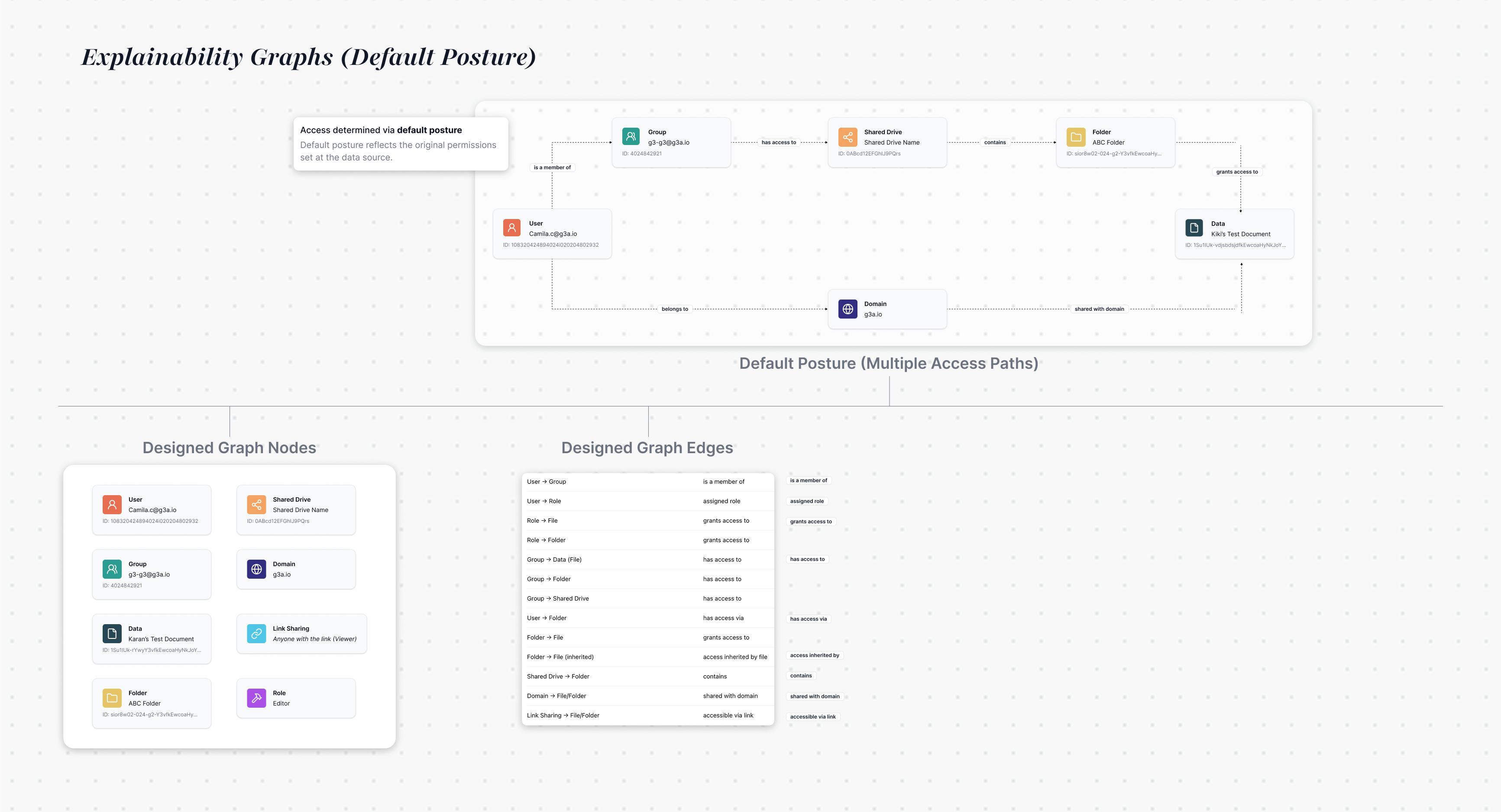

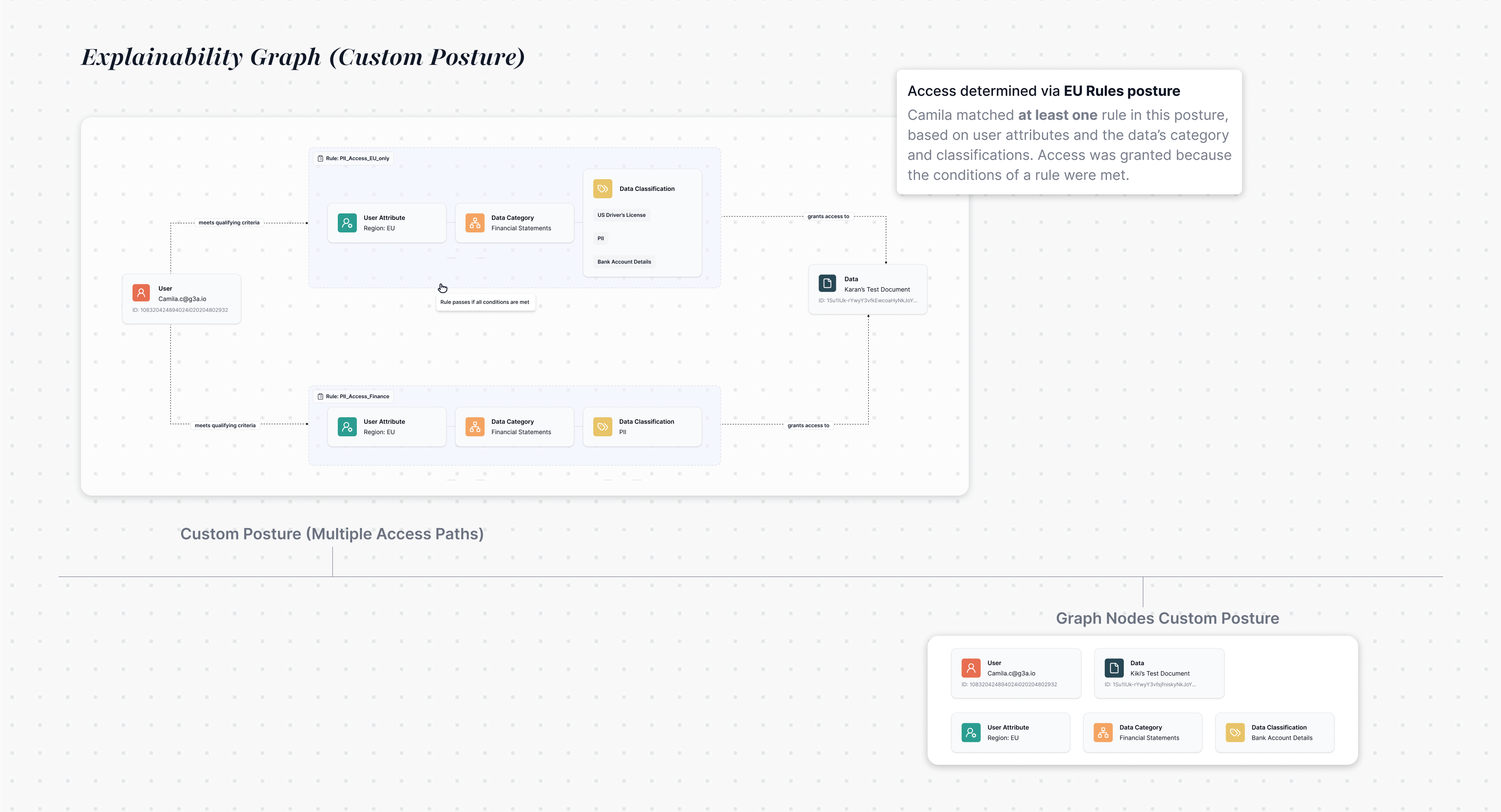

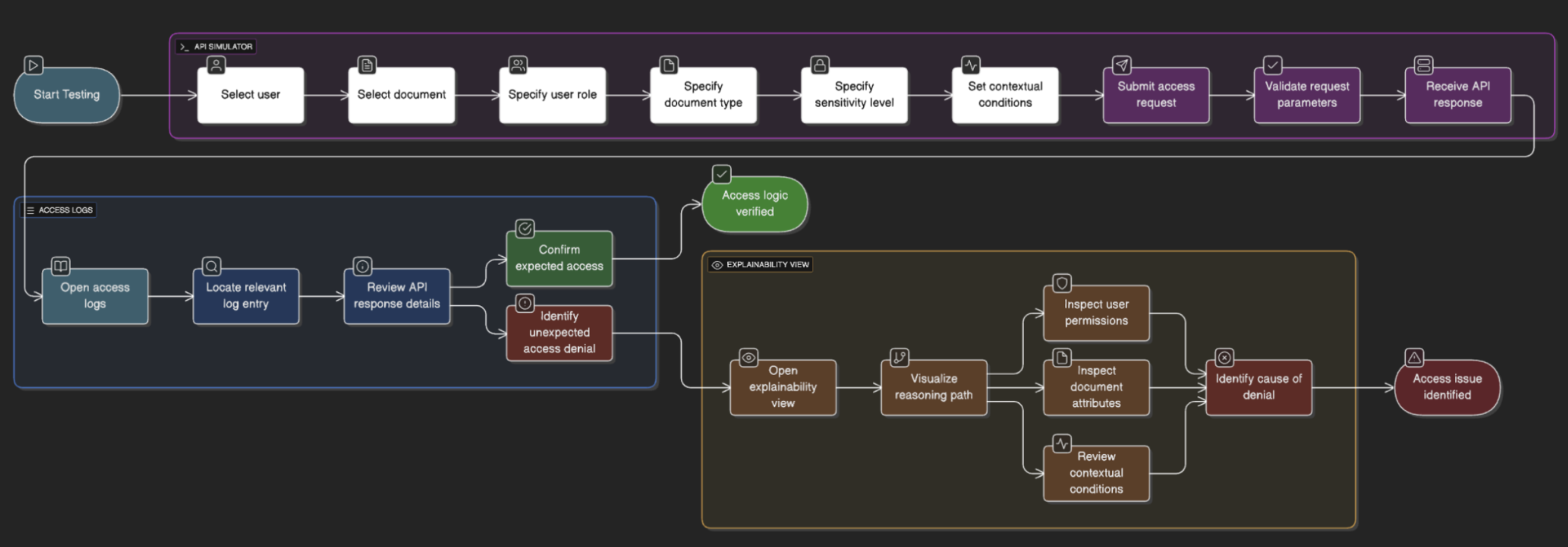

"I can see someone got access to sensitive data, but I have no idea why. What rule allowed this? Did we mess something up? If this ever leaks, I won’t just need logs. I’ll need a story I can stand behind."

The Pattern Behind Every Panic Call

When unexpected access approvals occurred, teams needed to:

Understand the reasoning: Why was this access granted?

Verify policy accuracy: Did we configure our rules correctly?

Defend to stakeholders: How do we explain this to execs or auditors?

Take corrective action: Can we fix this before it happens again?

Unexplained approvals create more panic than denials. Teams fear that sensitive data has been silently overexposed, with no clear trail of justification.

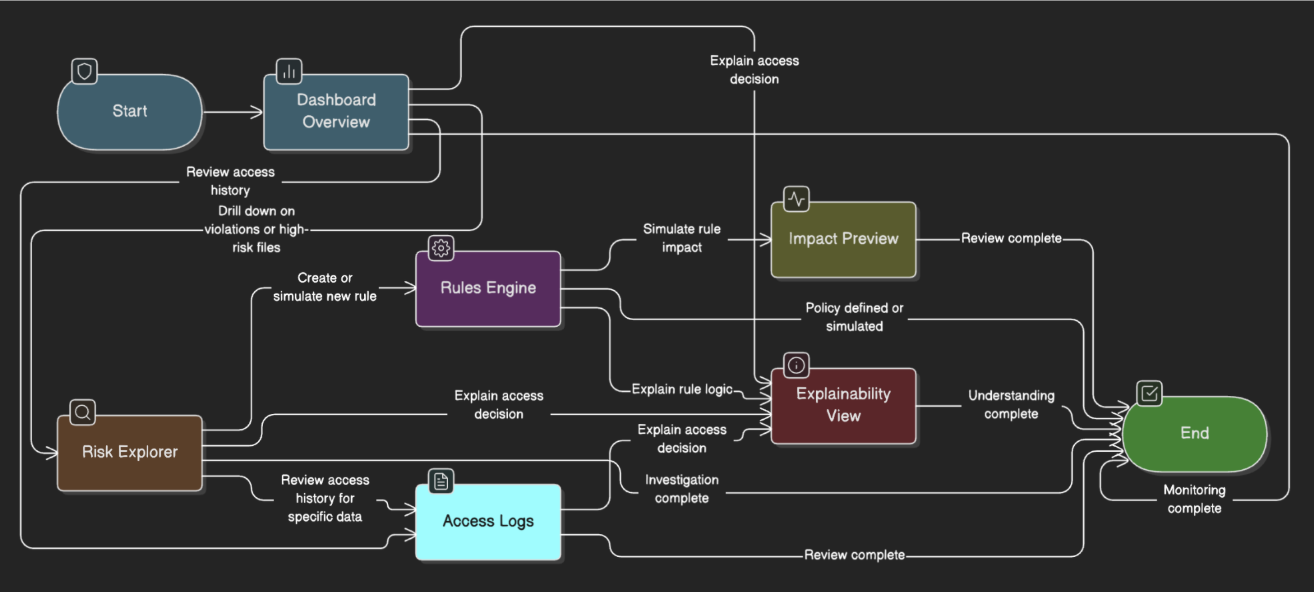

The Challenge

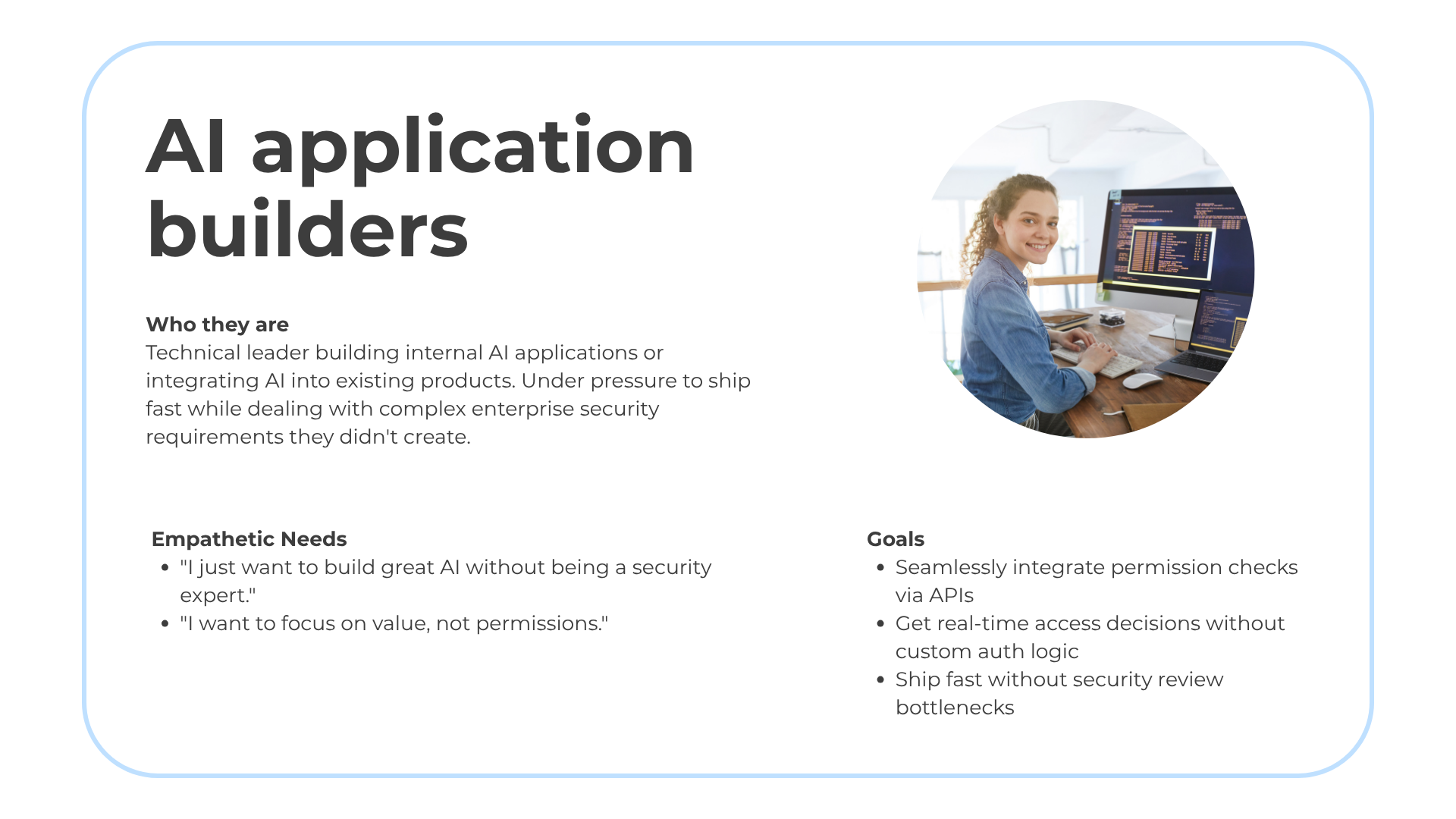

How do you organize 8 interconnected systems into coherent conceptual models for two very different user types?

Goal (security teams)

Monitor data access risk, define policies, and simulate impact before enforcing.

Goal (developers)

Test and verify access logic during app development.

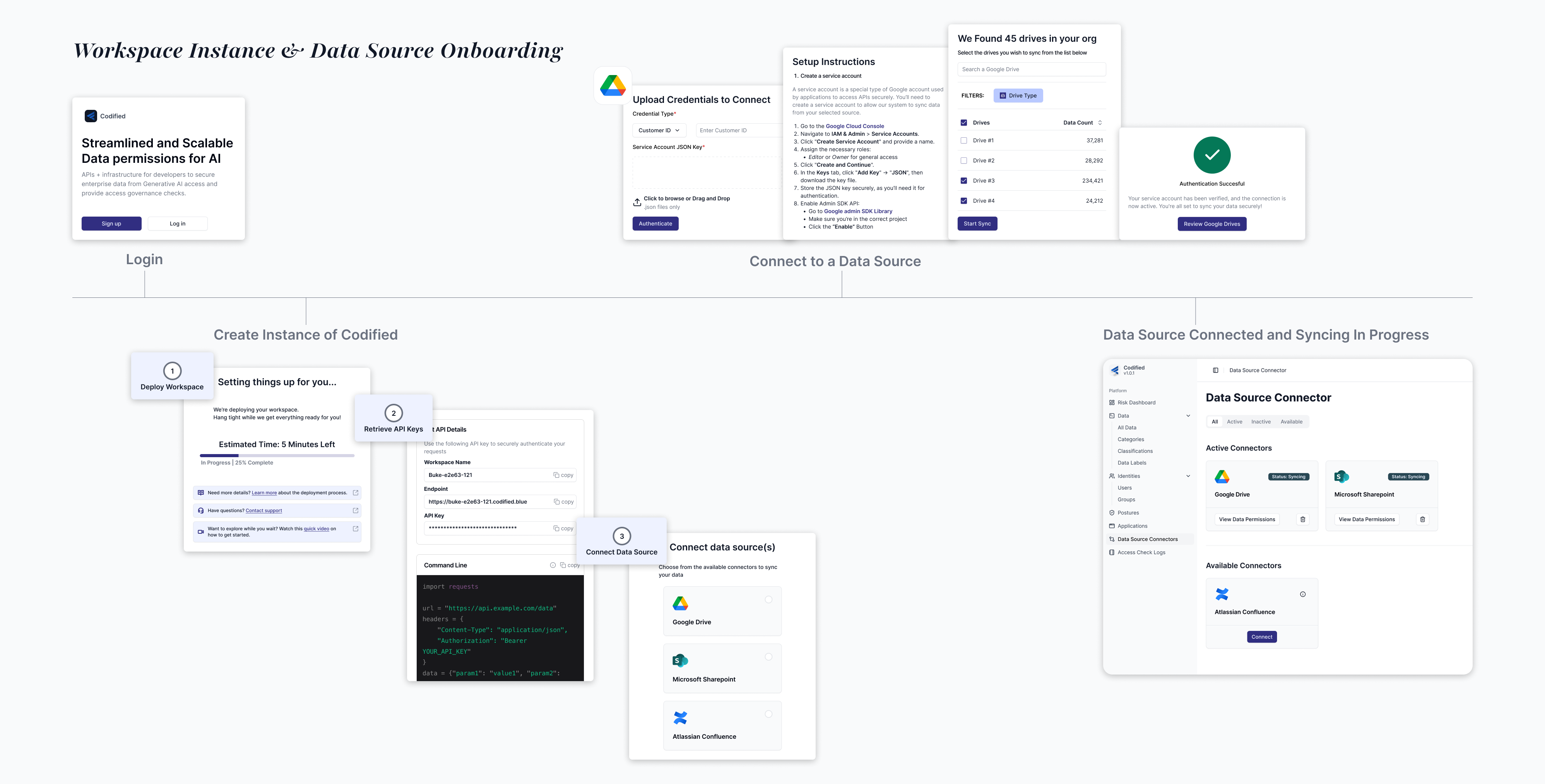

Security teams can add, monitor, and manage data sources without extensive IT involvement. This removed the months-long integration cycles that typically delayed AI projects.

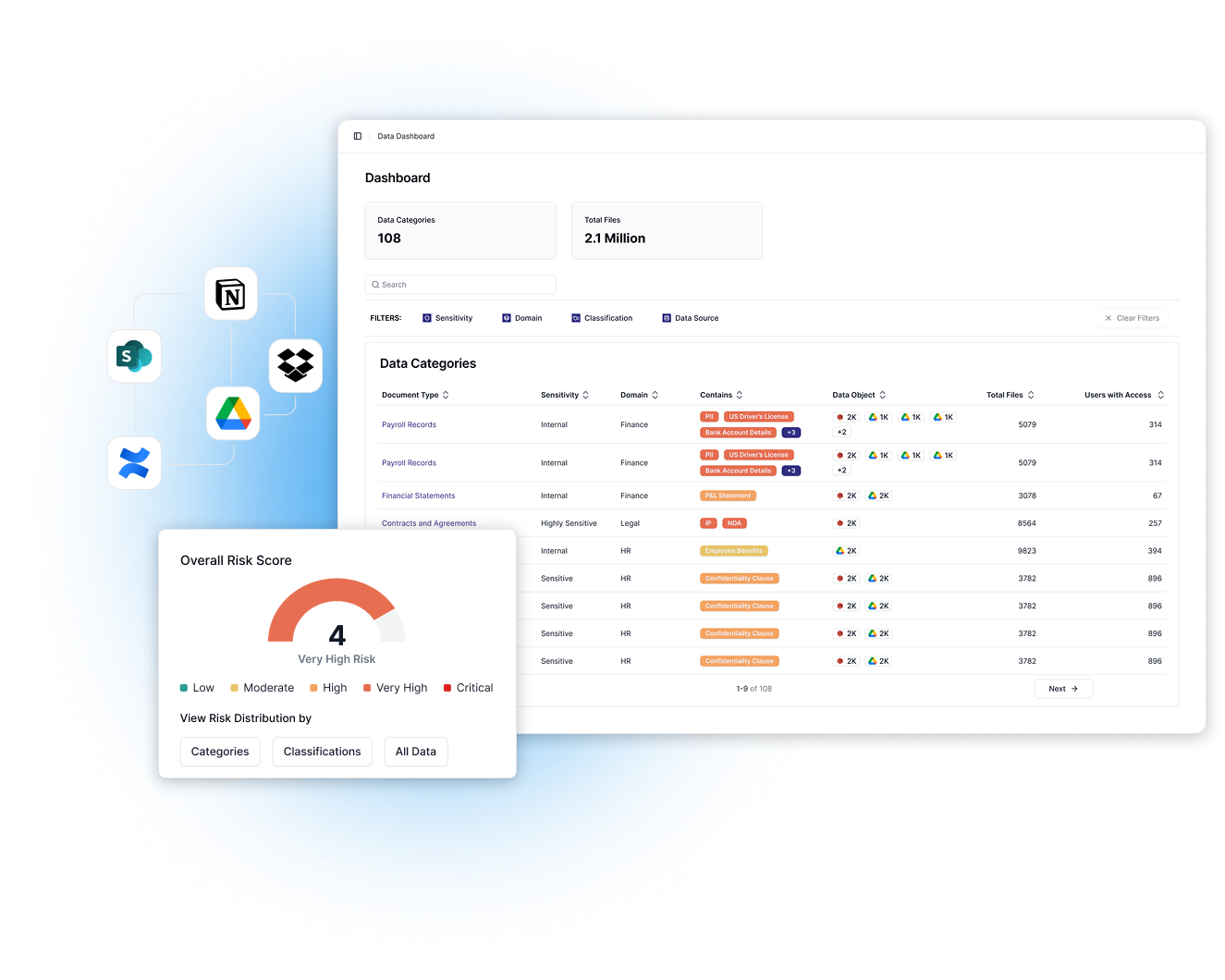

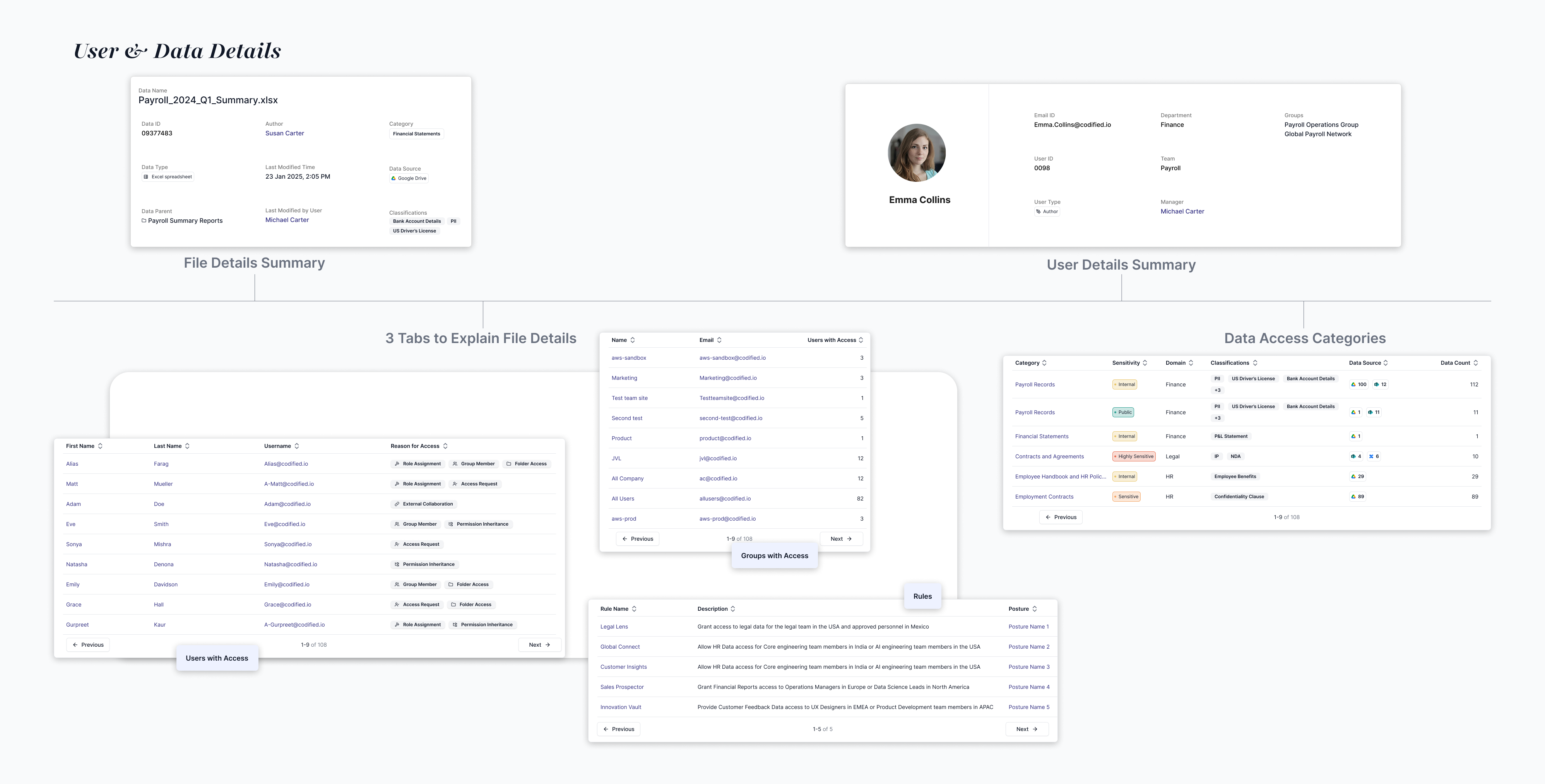

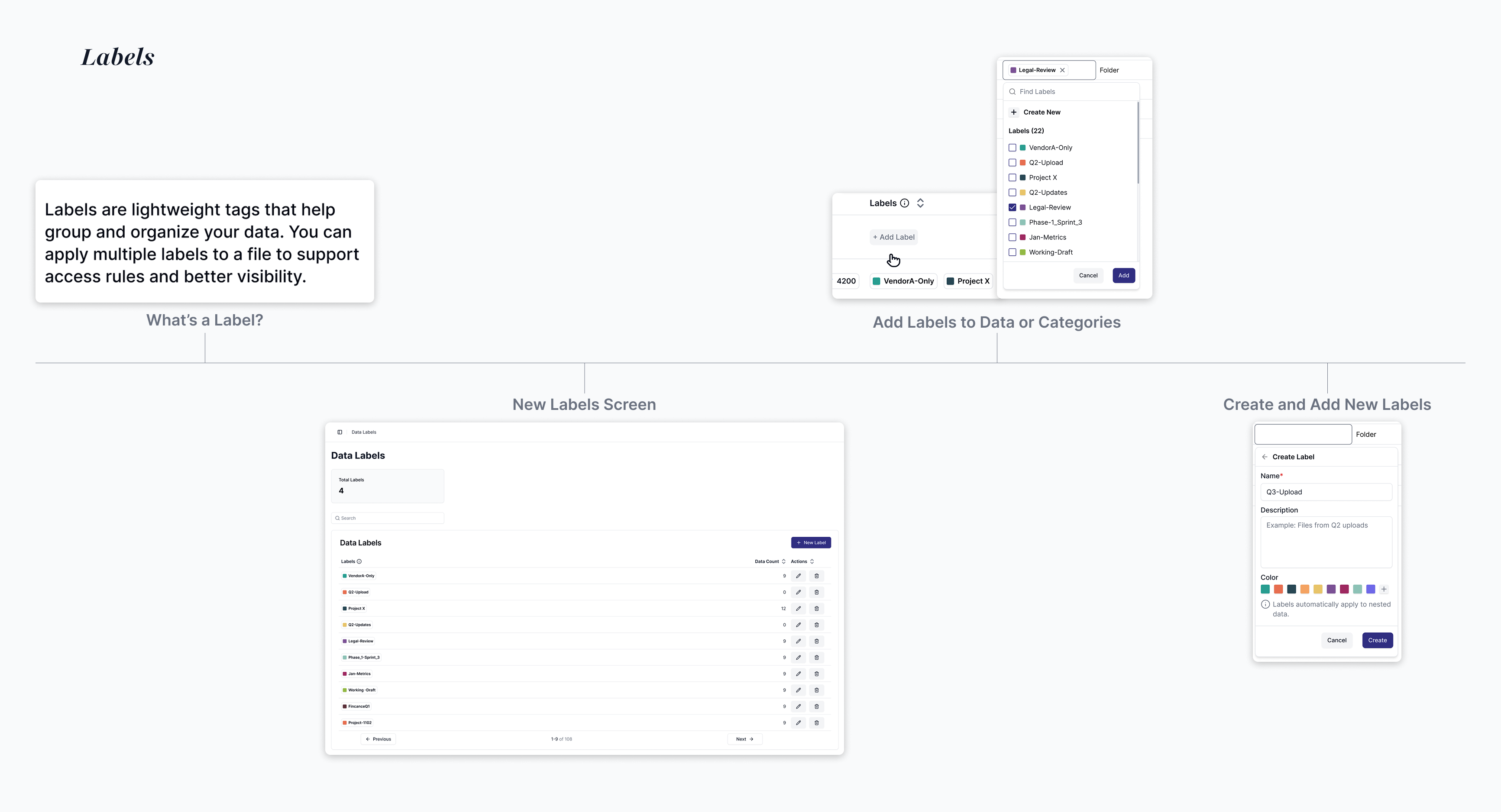

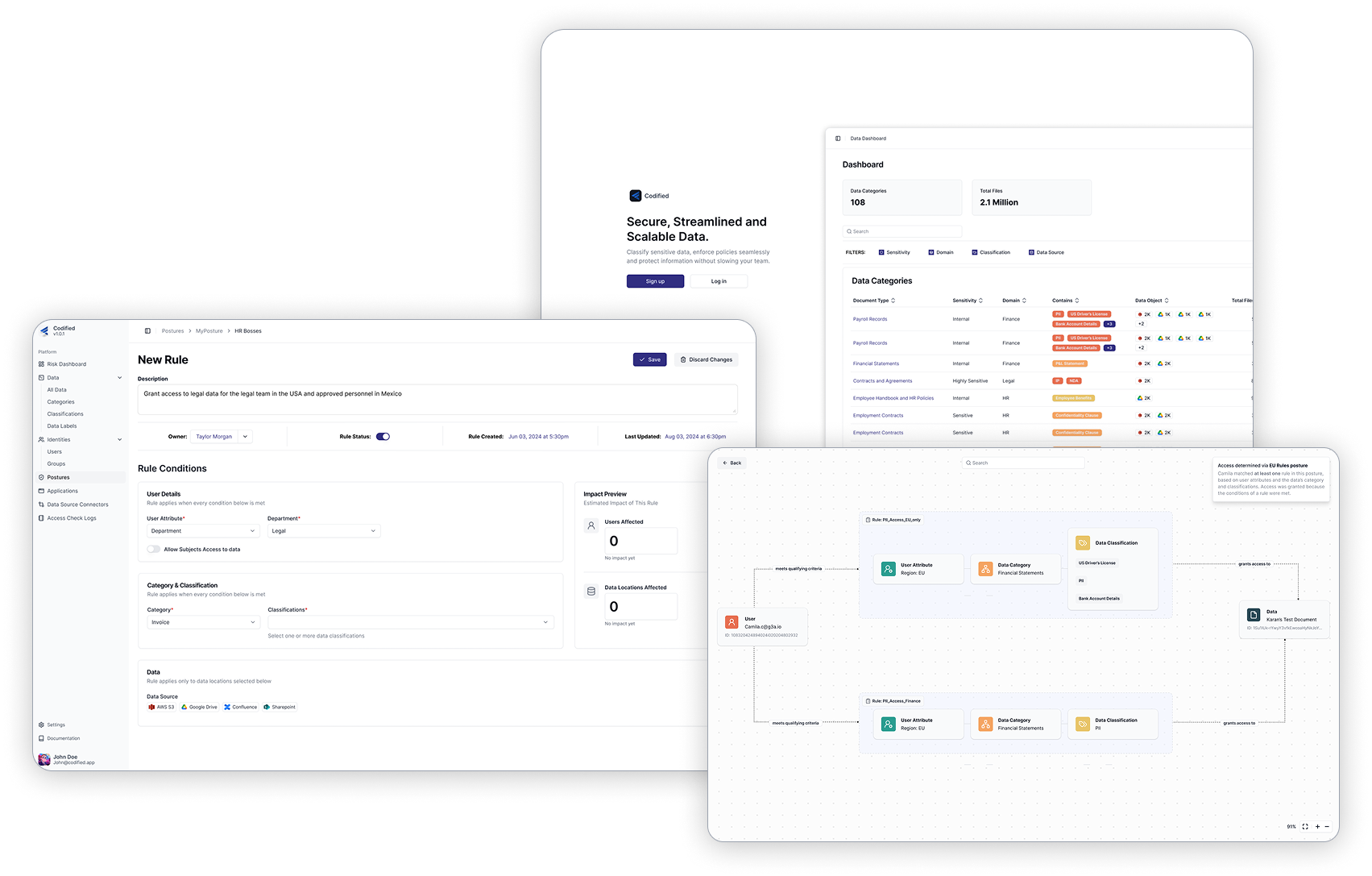

Codified auto-categorizes data and surfaces insights about it. Teams can also create custom, business-specific labels like “Board-level Confidential” and apply them across data hierarchies with inheritance. This solved a major pain point by aligning access rules with the way enterprises actually think about their data.
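To make "apply them across hierarchies with inheritance" concrete, here is a minimal sketch of one possible inheritance model. It assumes labels are additive (a file carries its own labels plus every label on its parent folders) and that there are no overrides; `Node` and `effective_labels` are hypothetical names for illustration, not Codified's implementation.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    """A folder or file in a hypothetical data-source hierarchy."""
    name: str
    labels: set[str] = field(default_factory=set)  # labels applied directly to this node
    children: list[Node] = field(default_factory=list)

    def add(self, child: Node) -> Node:
        """Attach a child node and return it so calls can be chained."""
        self.children.append(child)
        return child


def effective_labels(root: Node) -> dict[str, set[str]]:
    """Map each node's path to its own labels plus every ancestor's labels."""
    result: dict[str, set[str]] = {}

    def walk(node: Node, path: str, inherited: set[str]) -> None:
        # Assumed additive inheritance: a child never drops a parent's label.
        labels = inherited | node.labels
        result[path] = labels
        for child in node.children:
            walk(child, f"{path}/{child.name}", labels)

    walk(root, root.name, set())
    return result


# Usage: label a folder once and let the files inside it inherit the label.
root = Node("drive")
board = root.add(Node("board", {"Board-level Confidential"}))
board.add(Node("q3-minutes.docx"))
root.add(Node("marketing")).add(Node("launch.pdf", {"Customer-facing Approved"}))

labels = effective_labels(root)
print(sorted(labels["drive/board/q3-minutes.docx"]))  # ['Board-level Confidential']
```

Labeling the folder once, rather than every file, is what lets a business-specific label like “Board-level Confidential” follow the data as new files are added.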

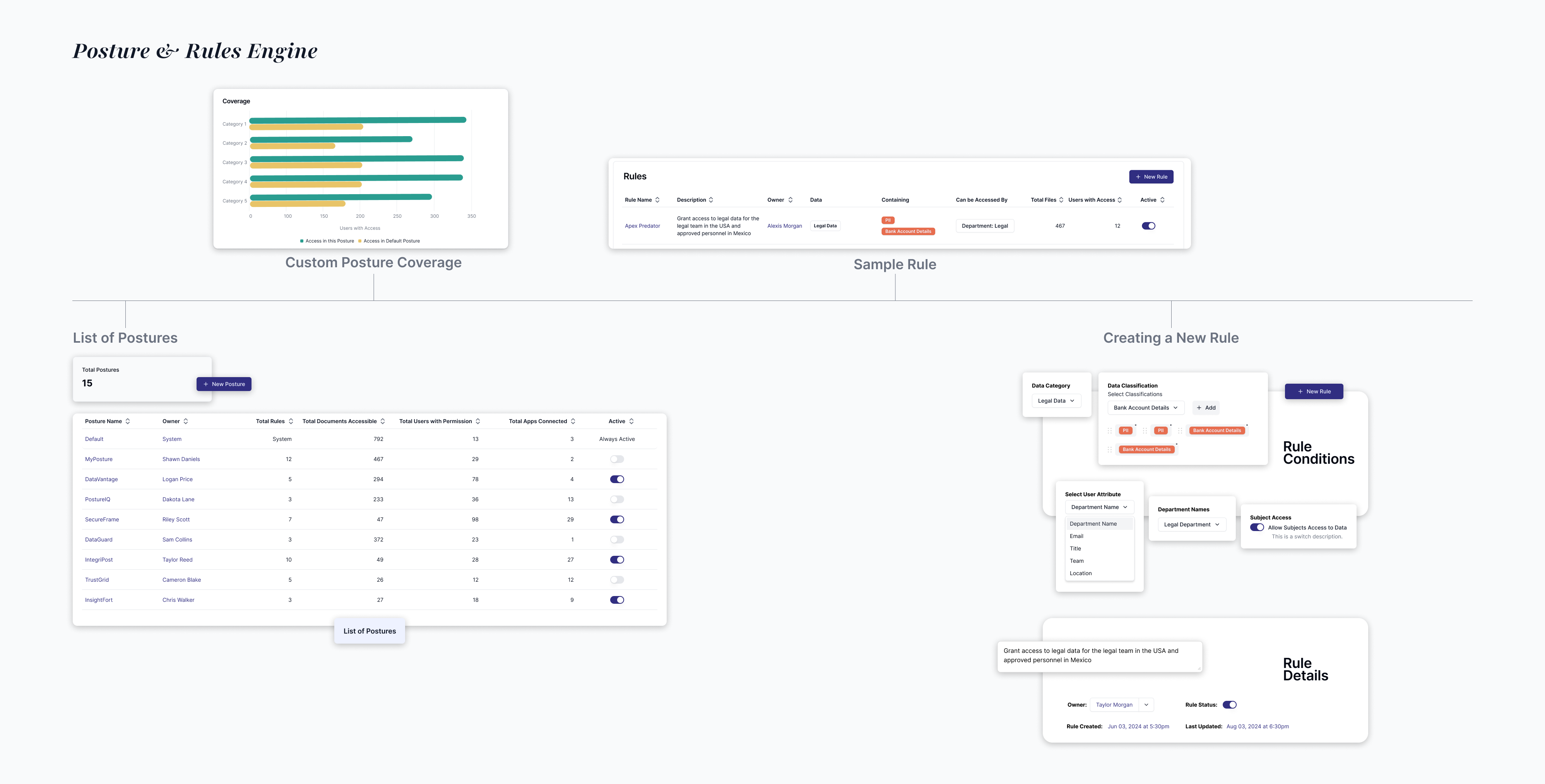

A rule creation flow let teams set access policies by combining user attributes with data categories, explore the full data hierarchy from folders down to individual files, and preview exactly which users and files would be affected. This reduced the fear of breaking critical access and gave teams confidence before enforcing rules.
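The "preview before you apply" idea can be sketched as a dry run: evaluate a candidate rule against today's access and report who and what would lose access, without enforcing anything. This is a minimal illustration assuming a single deny-style rule that matches one user attribute and one data label, and a known set of currently allowed (user, file) pairs; `DenyRule` and `preview` are hypothetical names, not Codified's rules engine.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DenyRule:
    """Hypothetical rule: users whose attribute equals `value` lose access to files carrying `label`."""
    attribute: str  # user attribute to match, e.g. "department"
    value: str      # attribute value to match, e.g. "Engineering"
    label: str      # data label the rule protects, e.g. "Board-level Confidential"


def preview(
    rule: DenyRule,
    users: dict[str, dict[str, str]],
    file_labels: dict[str, set[str]],
    current_access: set[tuple[str, str]],
) -> set[tuple[str, str]]:
    """Dry run: return the (user, file) pairs allowed today that the rule would block.

    Nothing is enforced here; the caller reviews the result before applying the rule.
    """
    affected: set[tuple[str, str]] = set()
    for user, file in current_access:
        matches_user = users.get(user, {}).get(rule.attribute) == rule.value
        matches_file = rule.label in file_labels.get(file, set())
        if matches_user and matches_file:
            affected.add((user, file))
    return affected


# Usage: check the blast radius before hitting "apply".
users = {"ana": {"department": "Finance"}, "ben": {"department": "Engineering"}}
file_labels = {
    "board/q3-minutes.docx": {"Board-level Confidential"},
    "finance/budget.xlsx": {"Finance Internal"},
}
current_access = {
    ("ana", "finance/budget.xlsx"),
    ("ben", "board/q3-minutes.docx"),
    ("ben", "finance/budget.xlsx"),
}
rule = DenyRule("department", "Engineering", "Board-level Confidential")
impact = preview(rule, users, file_labels, current_access)
print(sorted({u for u, _ in impact}), sorted({f for _, f in impact}))
# ['ben'] ['board/q3-minutes.docx']
```

Because the preview is a pure function of the rule and current state, a team can see that the finance team's own access is untouched before anything is enforced.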

A single monitoring view lets security teams watch their environment: it surfaces an overall risk score, breaks policy violations down into intuitive charts and cards, and enables quick drill-downs into specific issues for faster investigation and action.

Teams can search logs for any user and file, trace decisions through a visual graph of rules and attributes, and see plain-language explanations with recommended actions.

An API-first integration flow let developers query access decisions programmatically, with each response returning both the decision and its reasoning.
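A decision that "returns both the decision and its reasoning" might look like the sketch below. The evaluation strategy (deny overrides allow, and no matching rule means deny) is an assumption chosen for illustration, as are the names `Rule`, `Decision`, and `check_access`; this is not Codified's actual API.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Rule:
    """Hypothetical access rule combining a user attribute with a data label."""
    name: str
    effect: str     # "allow" or "deny"
    attribute: str  # user attribute to match, e.g. "department"
    value: str      # required attribute value
    label: str      # data label the rule applies to


@dataclass
class Decision:
    allowed: bool
    reasons: list[str]  # human-readable trail of why the decision was made


def explain(verb: str, r: Rule) -> str:
    return f"{verb} by rule '{r.name}' ({r.attribute}={r.value}, label={r.label})"


def check_access(user_attrs: dict[str, str], file_labels: set[str], rules: list[Rule]) -> Decision:
    """Assumed deny-overrides evaluation with default deny; every outcome carries its reasons."""
    matched = [
        r for r in rules
        if user_attrs.get(r.attribute) == r.value and r.label in file_labels
    ]
    denies = [explain("denied", r) for r in matched if r.effect == "deny"]
    if denies:
        return Decision(False, denies)
    allows = [explain("allowed", r) for r in matched if r.effect == "allow"]
    if allows:
        return Decision(True, allows)
    return Decision(False, ["no matching rule; denied by default"])


rules = [
    Rule("finance-reads-finance", "allow", "department", "Finance", "Finance Internal"),
    Rule("no-board-docs-for-eng", "deny", "department", "Engineering", "Board-level Confidential"),
]
decision = check_access({"department": "Finance"}, {"Finance Internal"}, rules)
print(asdict(decision))  # a plain dict that could be serialized as a response body
```

Returning the matched rules alongside the boolean is what turns an unexplained approval into a trail a security team can defend to execs or auditors.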

❤️ Customers loved

Classification & Categorization: "This is the biggest gap in our current tools. The way you handle classification is exactly what we need."

API-First Integration: "I really like the API route, much better than the manual policy mess we deal with now."

Comprehensive Metadata Store: "It’s not just permissions, the fact that you capture rich metadata context too makes this way more useful than a traditional access control tool."

✅ Customers acknowledged

100% Problem Confirmation: Every POC participant validated that permission visibility and explainability were missing in their AI deployments.

Existing Tools Are Inadequate: "Current DSPMs are useless beyond surface-level scanning — they can’t tell us anything actionable."

Need for the Capability: Multiple stakeholders expressed intent to build, buy, or partner to acquire these capabilities.

Timing Urgency: Several teams had active implementation deadlines and requested extended evaluation periods to explore fit.

⚠️ Customers were wary of

Security Integration Concerns: "There’s no way our infosec team will allow unrestricted scanning. This could block adoption."

Scalability Questions: "We have millions of documents. We’ll need to see real proof that this works at scale."

Architecture Philosophy Divide: Some stakeholders preferred solving the problem upstream (at the data source) rather than in the application layer.

Tool Fatigue: Security teams expressed hesitancy about adding “yet another tool” to an already crowded governance stack.

Over 9 months, we ran 4 enterprise POCs

50% demonstrated clear product fit, with one additional POC showing positive signals

1 of 4 converted into a paid engagement, validating early commercial interest while we were still pre-revenue

Enterprise-scale validation tested on environments with 2M+ documents and 180+ employees

Support for 3 key data connectors, prioritizing high-value sources based on early customer needs

Trust is a design problem

Enterprise AI adoption isn't limited by technology; it's limited by trust. Even the most sophisticated AI systems fail if decision-makers don't understand or trust how they work.

Validation Comes in Many Forms

Customer enthusiasm, pilot programs, and design pattern adoption across an industry are all forms of validation, even when they don't immediately translate to sustainable business outcomes.